I am dusting off an old post I wrote more than two years ago, and while it shows my laziness, I am doing it in the belief that the ideas I raised back then may soon get an answer … albeit not exactly the way I imagined. And interestingly enough, just about every company I mentioned may have a role in it. Or not. After all, it’s just speculation. So here’s the old post:

Google’s next killer app will be an accounting system, speculates Read/WriteWeb. While I am doubtful, I enthusiastically agree that it could be the next killer app; in fact, don’t stop there: why not add CRM, Procurement, Inventory, HR?

The thought of Google moving into business process / transactional systems is not entirely new: early this year Nick Carr speculated that Google should buy Intuit, soon followed by Phil Wainewright and others: Perhaps Google will buy Salesforce.com after all. My take was that it made sense for Google to enter this space, but it did not need to buy an overpriced heavyweight; rather, it could acquire a small company with a good all-in-one product:

Yet, unlikely as it sounds, the deal would make perfect sense. Google clearly aspires to be a significant player in the enterprise space, and the SMB market is a good stepping stone; in fact, more than that, it is a lucrative market in itself. Bits and pieces in Google’s growing arsenal: Apps for Your Domain, JotSpot, Docs and Sheets … Recently there was some speculation that Google might jump into another acquisition (ThinkFree? Zoho?) to be able to offer a more tightly integrated Office. Well, why stop at “Office”? Why not go for a complete business solution, offering both the business/transactional system and an online office, complemented by a wiki? Such an offering, combined with Google’s robust infrastructure, could very well be the killer package for the SMB space, catapulting Google to the position of dominant small business system provider.

This is probably a good time to disclose that I am an Advisor to a Google competitor, Zoho, yet I am cheering for Google to enter this market. More than a year ago I wrote a highly speculative piece: From Office Suite to Business Suite:

How about transactional business systems? Zoho has a CRM solution – big deal, one might say, the market is saturated with CRM solutions. However, what Zoho has here goes way beyond the scope of traditional CRM: they support Sales Order Management, Procurement, Inventory Management, Invoicing – to this ex-ERP guy it appears Zoho has the makings of a CRM+ERP solution, under the disguise of the CRM label.

Think about it. All they need is the addition of Accounting, and Zoho can come up with an unparalleled Small Business Suite, which includes the productivity suite (what we now consider the Office Suite) and all process-driven, transactional systems: something like NetSuite + Microsoft, targeted at SMBs.

The difficulty for Zoho and other smaller players will be on the Marketing / Sales side. Many of us SaaS pundits believe the major shift SaaS brings about isn’t just in delivery/support, but in the way we can reach the “long tail of the market” cost-efficiently, via the Internet. The web customer is informed, comes to your site, tries the products, then buys or leaves. There’s no room (or budget) for extended sales cycles, site visits, customer lunches, the typical dog-and-pony show. This pull model seems to be working for smaller services, like Charlie Wood’s Spanning Sync:

So far the model looks to be working. We have yet to spend our first advertising dollar and yet we’re on track to have 10,000 paying subscribers by Thanksgiving.

It may also work for lightweight Enterprise Software:

It’s about customers wanting easy to use, practical, easy to install (or hosted) software that is far less expensive and that does not entail an arduous, painful purchasing process. It should be simple, straightforward and easy to buy.

Atlassian, the company whose President I’ve just quoted, is the market leader in its space, listing the top Fortune 500 as its customers, yet it still has no sales force whatsoever.

However, when it comes to business process software, we’re just too damn conditioned to expect cajoling and hand-holding… the pull model does not quite seem to work. Salesforce.com, the “granddaddy” of SaaS, has a very traditional enterprise sales army, and even NetSuite, targeting the SMB market, came to similar conclusions. Says CEO Zach Nelson:

NetSuite, which also offers free trials, takes, on average, 60 days to close a deal and might run three to five demonstrations of the program before customers are convinced.

European All-in-One SaaS provider 24SevenOffice, which caters for the VSB (Very Small Business) market, also sees a hybrid model: automated web sales for 1-5 employee businesses, but above that they often get involved in some pre-sales consulting and hand-holding. Of course, I can also quote the opposite: WinWeb’s service is bought, not sold, and so is Zoho CRM. But this model is far from universal.

What happens if Google enters this market? If anyone can, they have the clout to create or expand a market and change customer behavior. Critics of Google’s Enterprise plans cite their poor support level, and call on them to essentially change their DNA, or fail in the Enterprise market. Well, I say, Google, don’t try to change, take advantage of who you are, and cater for the right market. As consumers we all (?) use Google services – they are great when they work, **** when they don’t. Service is non-existent, but we’re used to it. Google is a faceless algorithm, not people; we know that, and we have adjusted our expectations.

Whether it’s Search, Gmail, Docs, Spreadsheets, Wiki, Accounting, CRM, when it comes from Google, we’re conditioned to try-and-buy, without any babysitting. Small businesses don’t subscribe to Gartner, don’t hire Accenture for a feasibility study: their buying decision is very much a consumer-style process. Read a few reviews (ZDNet, not Gartner), test, decide and buy.

The way we’ll all consume software as a service some day.

(Cross-posted @ CloudAve )

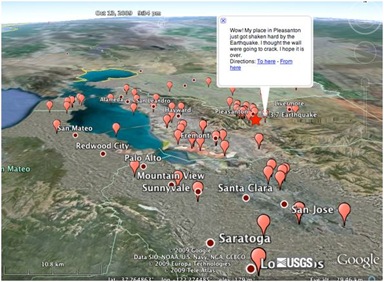
